# Grafbase Documentation

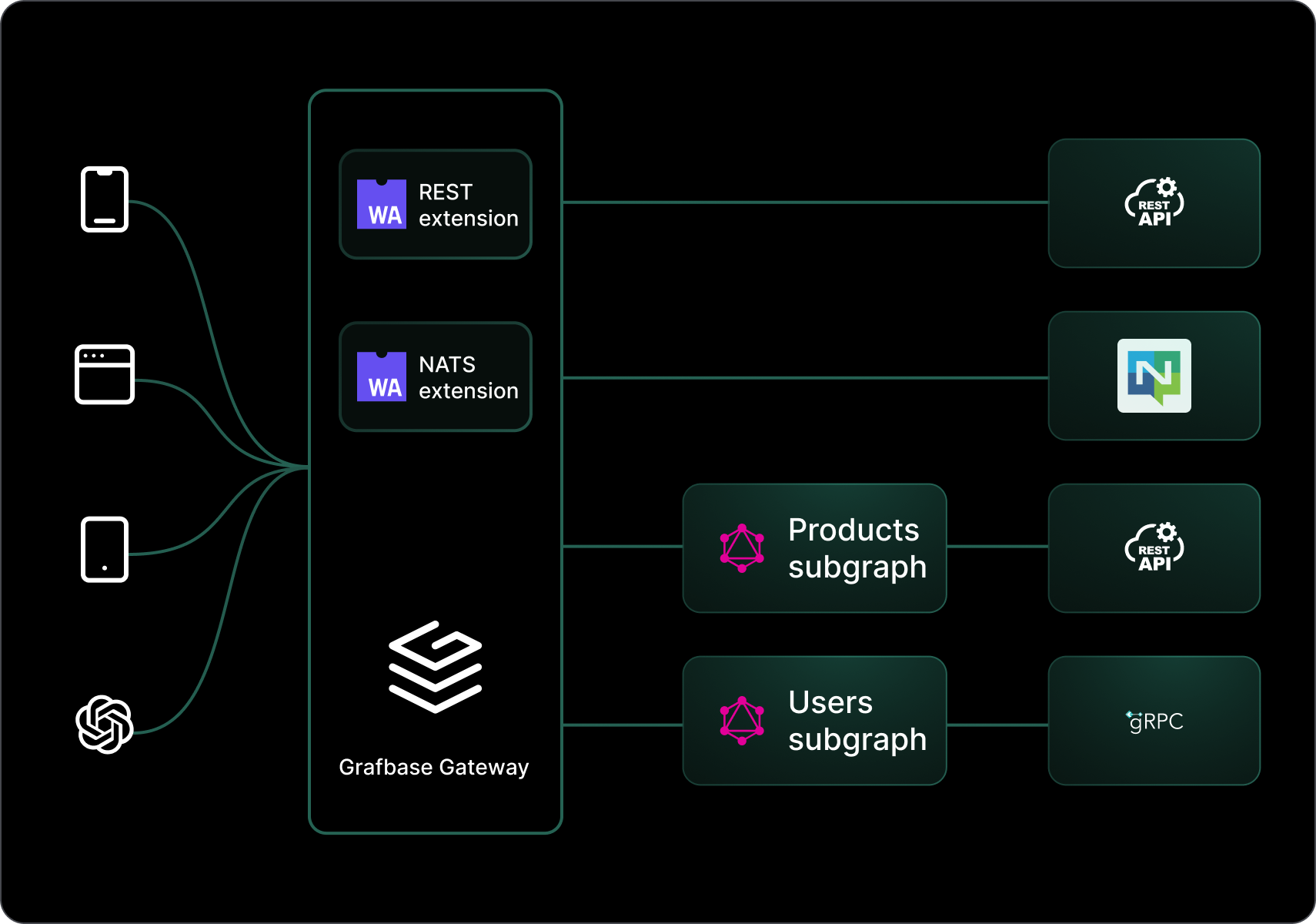

Grafbase is the easiest way to manage large scale federated graphs and is the fastest GraphQL Gateway on the market.

Compose your unified API from multiple data sources in just a few minutes with the Grafbase CLI.

## Get Started

Start building your federated graph in a few easy steps.

## Security

- [Trusted Documents](/docs/gateway/security/trusted-documents) let you define a set of trusted documents that can be used in your federated graph.

- [Rate Limiting](/docs/gateway/security/rate-limiting) helps you protect your federated graph from abuse.

- [Operation Limits](/docs/gateway/security/operation-limits) let you set limits on the number of operations that can be executed on your federated graph.

- [Message Signatures](/docs/gateway/security/message-signatures) let you sign messages to ensure their authenticity.

- [Authentication](/docs/gateway/security/authentication) restricts access to the gateway.

- [Authorization](/docs/gateway/security/authorization) restricts access to certain fields, objects, and more for a given operation.

- [Access Logs](/docs/gateway/security/access-logs) let you log access requests to your federated graph with custom hooks.

- [Access Tokens](/docs/gateway/security/access-tokens) let you manage access tokens to manage your federated graph.

## Performance

- [Automatic Persisted Queries](/docs/gateway/performance/automatic-persisted-queries) let you cache queries to improve performance.

- [Entity Caching](/docs/gateway/performance/entity-caching) lets you cache resolved entities to improve performance.

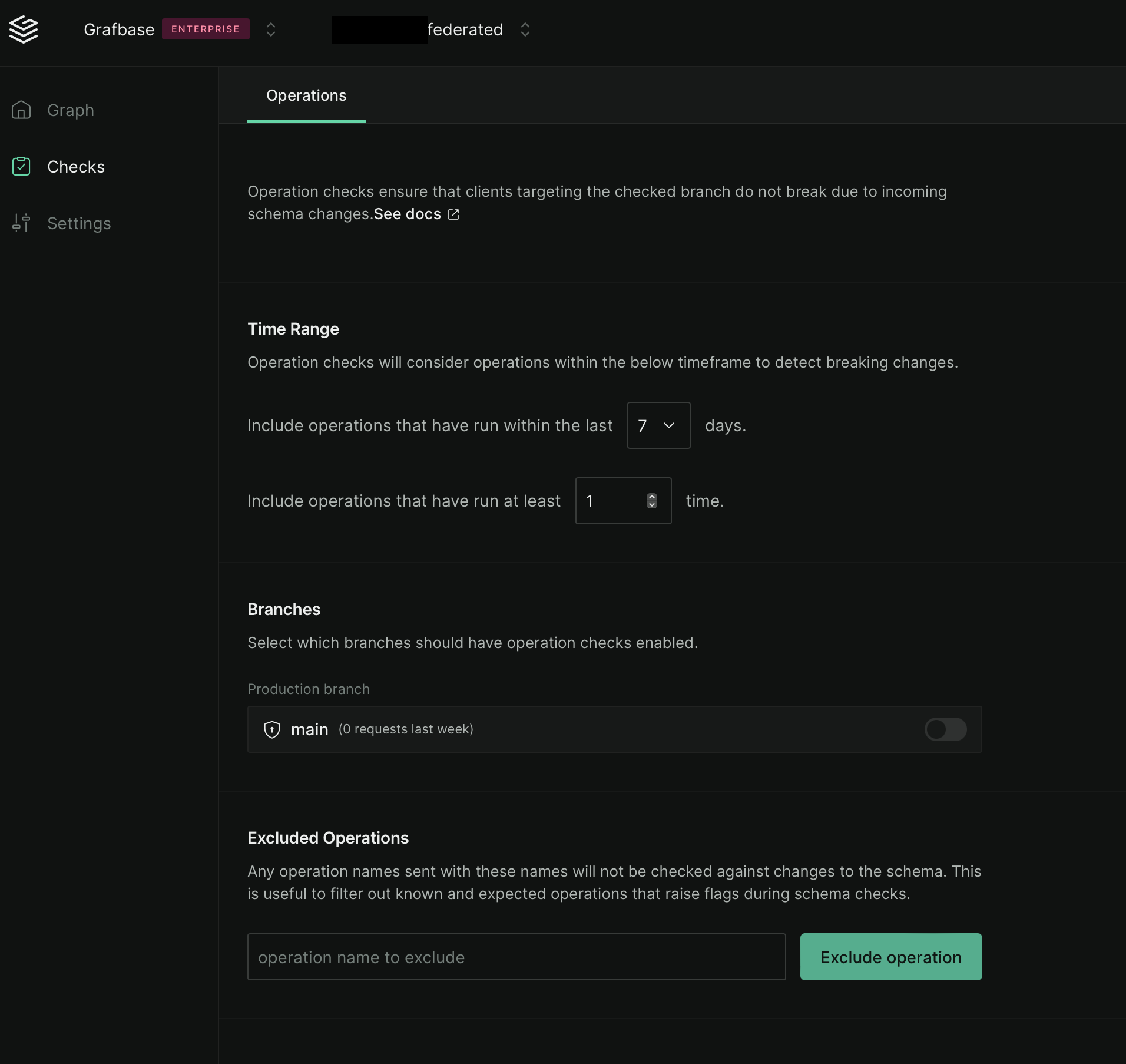

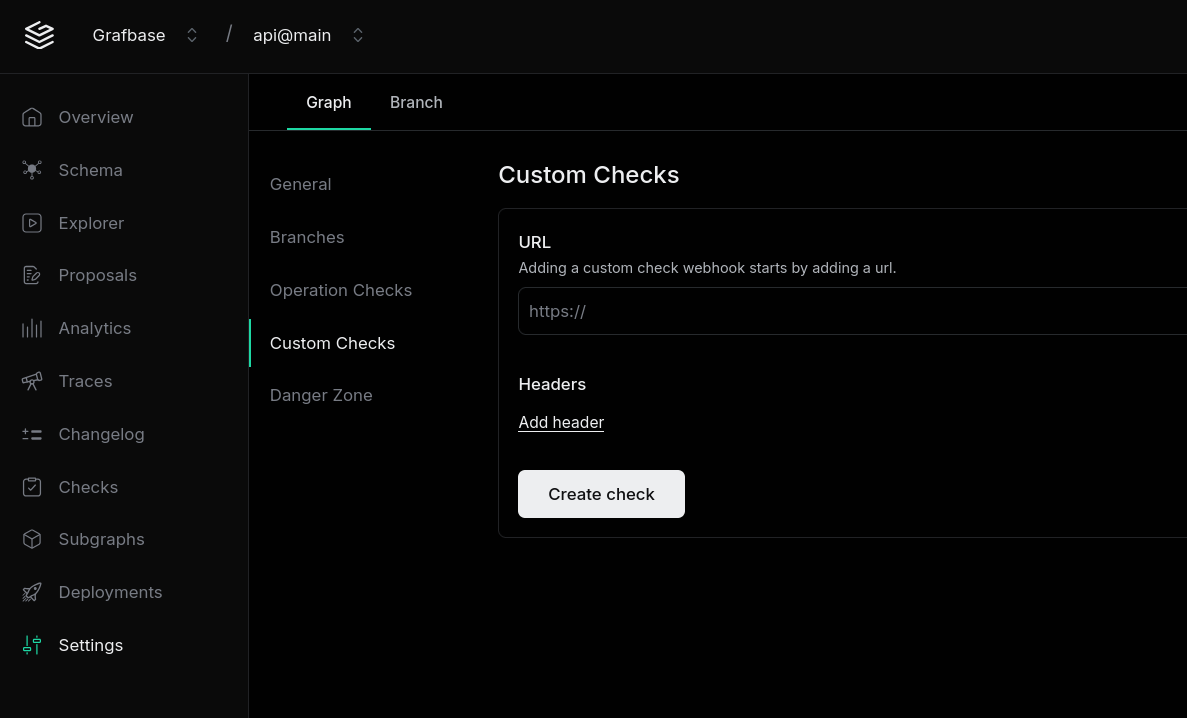

## Federation

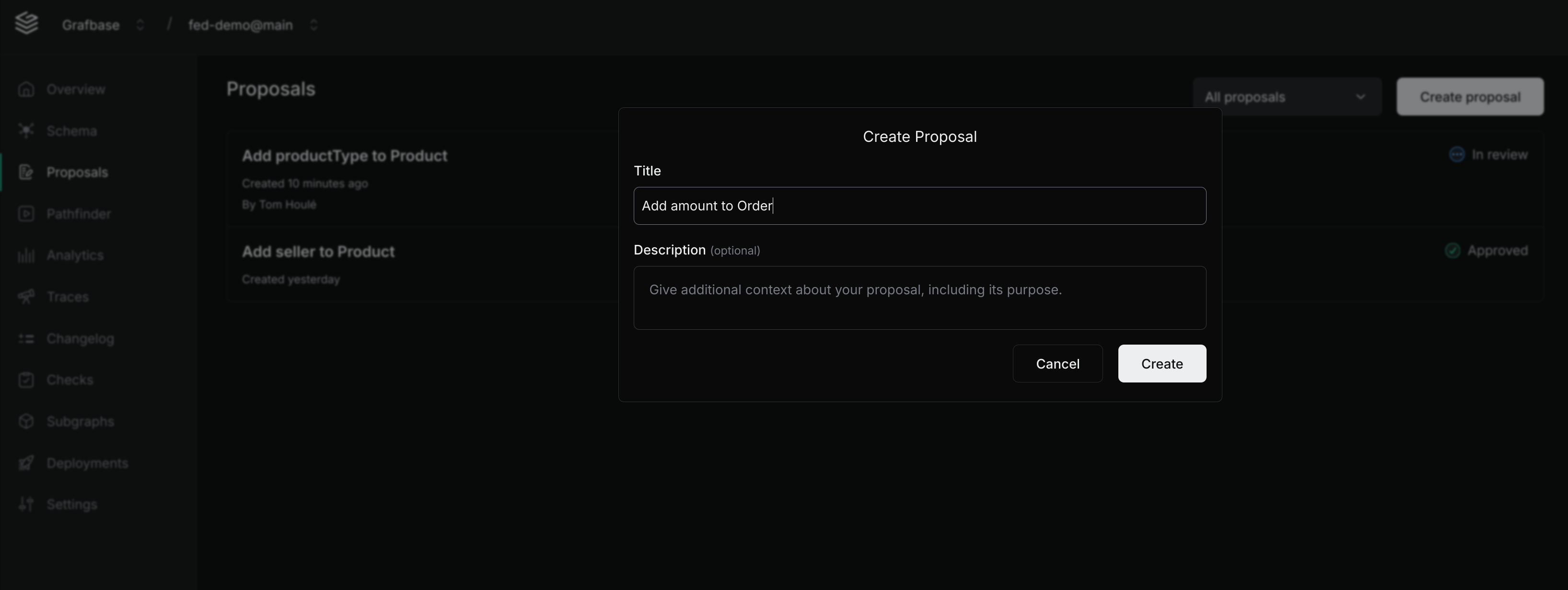

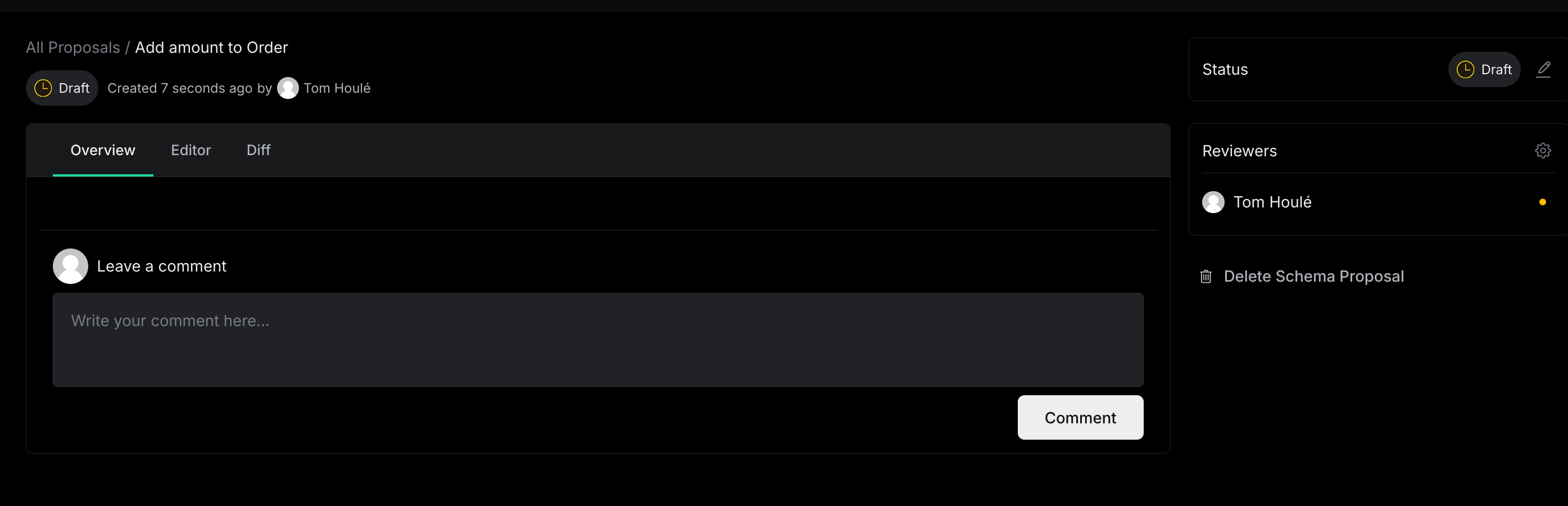

- [Schema Registry](/docs/platform/schema-registry) lets you compose subgraphs into a single federated graph.

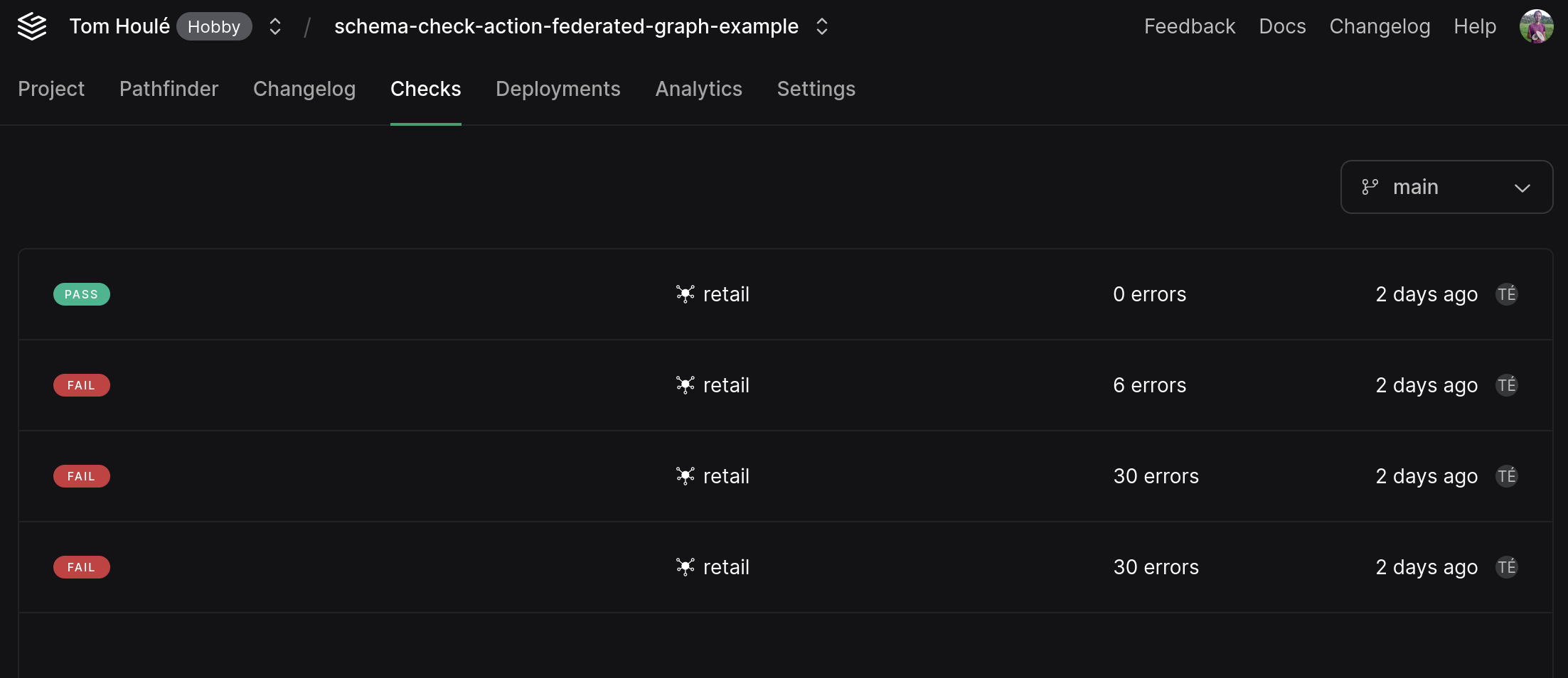

- [Schema Checks](/docs/platform/schema-checks) let you validate your subgraph against the federated graph schema.

## Observability

The Grafbase Gateway provides [logs](/docs/gateway/observability#logs), [metrics](/docs/gateway/observability#metrics), and [traces](/docs/gateway/observability#traces) to help you monitor and debug your federated graph. You can also store custom access log messages using the [Access Logs](/docs/gateway/security/access-logs) feature.

## Grafbase API

The [Grafbase Dashboard](/dashboard) is powered by the [Grafbase API](/docs/platform/api). This same API is available for users to manage their account and projects programmatically.

---

# Gateway - Installation

To install the Grafbase Gateway, run the following command:

```bash

curl -fsSL https://grafbase.com/downloads/gateway | bash

```

## Hybrid operation

In hybrid mode, the gateway fetches the current federated graph from the Grafbase platform. Create a federated graph in the Grafbase API, publish the subgraphs, and the gateway will always have the current graph running.

Start the gateway in hybrid mode with the graph reference and an organization access token:

```bash

GRAFBASE_ACCESS_TOKEN=token ./grafbase-gateway \

--config grafbase.toml \

--graph-ref graph@branch

```

`graph-ref` points to a graph created in the Grafbase API and its branch. If the branch is empty, the gateway uses the production branch by default.

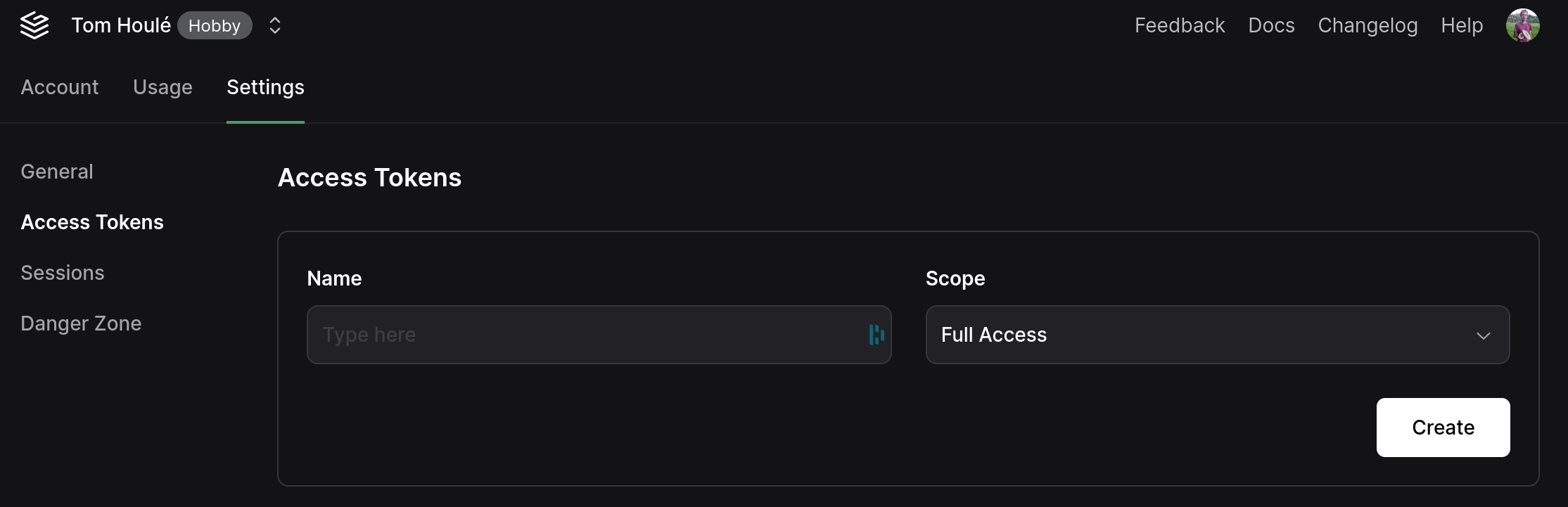

Create the organization access token in the account settings under "Access Tokens" and ensure it has permission to read the graph.

The gateway polls for graph changes every ten seconds.

## Air-gapped operation

In air-gapped mode, the gateway never calls the Grafbase API. You must provide the federated graph SDL as a file.

Start the gateway in self-hosted mode:

```bash

./grafbase-gateway \

--config /path/to/grafbase.toml \

--schema /path/to/federated-schema.graphql \

--listen-address 127.0.0.1:4000

```

Every five seconds, the gateway checks for changes in the schema file and initializes itself with the modified contents if it detects any changes.

## Configuration

The Grafbase Gateway reads its configuration from a TOML file. Read the configuration reference for more information.

---

# Gateway - Deployment - Docker

The Grafbase Gateway is published as a Docker image to GitHub Container Registry.

Example `compose.yaml`:

```yaml

services:

grafbase:

image: ghcr.io/grafbase/gateway:latest

restart: always

volumes:

- ./grafbase.toml:/etc/grafbase.toml

environment:

GRAFBASE_GRAPH_REF: 'graph-ref@branch'

GRAFBASE_ACCESS_TOKEN: 'ACCESS_TOKEN_HERE'

ports:

- '5000:5000'

```

The above `compose.yaml` file will start the Grafbase Gateway with the latest version of the image, bind the configuration file `grafbase.toml` to the container, and set the environment variables `GRAFBASE_GRAPH_REF` and `GRAFBASE_ACCESS_TOKEN` to the desired graph reference and access token, respectively. The configuration file `grafbase.toml` should be present in the same directory as the `compose.yaml` file.

---

# Gateway - Deployment - Kubernetes

Deploy the Grafbase Gateway through different options that fit diverse infrastructure needs. Choose from a [multi-platform binary](https://github.com/grafbase/grafbase/releases?q=gateway) for direct installation on various operating systems, or use a [Docker image](https://github.com/grafbase/grafbase/pkgs/container/gateway) that integrates into containerized environments like Kubernetes. This flexibility lets teams deploy the gateway in ways that best fit their workflow on local machines, cloud platforms, or hybrid infrastructures.

## Kubernetes

Kubernetes offers popular container orchestration with wide adoption for its scalability, reliability, and powerful ecosystem. This ecosystem includes tools like Helm, which simplifies the deployment and management of Kubernetes workloads through a templated, versioned, and reusable configuration framework. Use Helm to package applications into charts and streamline complex workload deployments consistently across environments.

### Gateway Helm Chart

Grafbase provides a Helm chart to simplify gateway deployment. The GitHub Container Registry hosts it with [Open Container Initiative (OCI)](https://helm.sh/docs/topics/registries/) compliance. Find the chart and versions at:

```bash

https://ghcr.io/grafbase/helm-charts/gateway

```

## Deploying

The chart includes default installation configuration for quick gateway setup with minimal configuration. While functional and easy to start with, tune this setup to accommodate real use cases.

Follow these steps to install the default configuration and customize settings for:

1. Number of replicas

2. Auto-scaling

3. Compute resources

4. External federated schema

5. External configuration

### Setup

Complete these prerequisites before deploying:

1. _Kubernetes Cluster:_ Get access to a Kubernetes cluster. Set up a local cluster like [kind](https://kind.sigs.k8s.io/) if needed.

2. _helm:_ Install Helm. Get started [here](https://helm.sh/docs/intro/quickstart/).

3. _kubectl:_ Install kubectl and point it to your cluster. Get started [here](https://kubernetes.io/docs/tasks/tools/#kubectl).

### Basic deployment

Start running the gateway with:

```bash

helm install test oci://ghcr.io/grafbase/helm-charts/gateway --version

```

Verify gateway operation:

```bash

kubectl get pods

```

Look for a running pod named `test-gateway`.

### Customize deployment

Use a Helm [values file](https://helm.sh/docs/chart_template_guide/values_files/) to customize your deployment:

```yaml

# 1. number of desired replicas running

replicaCount: 2

# 2. auto-scaling behaviour

autoscaling:

enabled: true

minReplicas: 2

maxReplicas: 10

targetCPUUtilizationPercentage: 70

targetMemoryUtilizationPercentage: 70

# 3. compute resources

resources:

limits:

cpu: 2

memory: 2Gi

requests:

cpu: 1

memory: 1Gi

# 4. and 5. External schema and configuration from cluster configmaps

gateway:

externalConfig: true

externalSchema: true

args:

- --config

- /etc/grafbase/config/config.toml

- --schema

- /etc/grafbase/schema/schema.sdl

volumes:

- name: configuration

configMap:

name: grafbase-gateway-configuration

- name: schema

configMap:

name: grafbase-gateway-schema

volumeMounts:

- name: configuration

mountPath: /etc/grafbase/config

- name: schema

mountPath: /etc/grafbase/schema

```

This configuration:

1. Maintains 2 gateway replicas

2. Sets auto-scaling between 2 and 10 instances, scaling up at 70% CPU or memory usage

3. Allocates 1-2 CPU and 1-2GB memory per replica

4. Uses cluster configmaps for gateway configuration and federated schema, mounting them in specified paths

View all customizable values with:

```bash

helm show values oci://ghcr.io/grafbase/helm-charts/gateway --version

```

To apply customizations:

1. Save your settings to a file

2. Run:

```

helm upgrade test oci://ghcr.io/grafbase/helm-charts/gateway --version -f custom-values.yaml

```

Verify the deployment:

```bash

helm list

kubectl get pods

```

---

# Gateway - Deployment - Lambda

Deploy the Grafbase Gateway to the AWS Lambda platform as a serverless function. We provide a separate build targeting the Lambda platform for each gateway release. This has differences from running the gateway binary in a normal server environment. Select the correct builds for Lambda - normal builds won't work in AWS Lambda.

## Lambda Size Selection

The Grafbase Lambda Gateway uses minimal memory: common usage stays below hundred megabytes. The Lambda memory setting affects how much CPU AWS allocates for the function, so choosing a higher base memory amount can improve cold start times and general performance, even when the Gateway doesn't need the memory.

## Lambda Binary Selection

The [gateway release](https://github.com/grafbase/grafbase/releases) includes two Lambda-targeted binaries:

- `grafbase-gateway-lambda-aarch64-unknown-linux-musl` for Amazon Graviton platform, targeting 64-bit ARM architecture.

- `grafbase-gateway-lambda-x86_64-unknown-linux-musl` for 64-bit x86 platform.

The binaries won't work if you deploy them to the wrong CPU architecture. The aarch64 version is smaller, so using Graviton (when available in your region) can speed up cold starts due to the smaller size.

Lambda binaries work only in AWS Lambda or a Lambda-emulating docker image. These binaries optimize for size over runtime speed to minimize cold start time.

## Set up Lambda for the Grafbase Lambda Gateway

Configure the Lambda function as needed. Control Gateway execution settings through these environment variables:

- `GRAFBASE_CONFIG_PATH` sets the Grafbase configuration file path. Default: `./grafbase.toml`, looking for configuration in the Lambda root directory.

- `GRAFBASE_SCHEMA_PATH` sets the federated schema path. Default: `./federated.graphql`, looking in the Lambda root directory.

- `GRAFBASE_LOG` sets log level. Values: `error`, `warn`, `info`, `debug`, `trace` or `off`. Default: `info`.

- `GRAFBASE_LOG_STYLE` sets log message style. Values: `text` or `json`. Default: `text`.

## Deployment

Package the Grafbase Lambda Gateway with the gateway configuration and federated graph. Unlike a full server, Lambda functions freeze without traffic, preventing background graph updates. After publishing a new subgraph:

1. [Download](https://github.com/grafbase/grafbase/releases) your preferred Grafbase Lambda Gateway version

2. Rename the binary to `bootstrap`

3. Copy the configuration file to the same directory

4. Get the latest federated graph from the Grafbase API

Include these files in your deployment:

```bash

.

├── bootstrap

├── grafbase.toml

└── federated.graphql

```

Deploy following the [AWS documentation](https://docs.aws.amazon.com/lambda/latest/dg/gettingstarted-package.html).

## Configuration differences

Configure the Grafbase Lambda Gateway with the same configuration file as the binary, with these changes:

- AWS defines listen address and port - network settings don't apply

- AWS handles transport layer security - TLS settings in Grafbase configuration don't apply

- OpenTelemetry settings work similarly, but batching doesn't affect Lambda

- Set CORS from Gateway configuration or Lambda settings

## OpenTelemetry

We recommend using [AWS X-Ray](https://aws.amazon.com/xray/) for OpenTelemetry tracing in Lambda. The Grafbase Lambda Gateway propagates span IDs in X-Ray format and connects Lambda traces to Gateway traces.

Because functions sleep without traffic, you must flush traces before request responses. This increases response times. Run an OLPC collector in the same datacenter as your Lambda function. Install the [AWS distro for OpenTelemetry Collector](https://aws-otel.github.io/docs/setup/ec2) in Amazon Elastic Computer Cloud. Place the EC2 instance in the same region and virtual private network for best performance.

Configure the Grafbase Lambda Gateway to send traces to your functional OpenTelemetry collector:

```toml

[telemetry]

service_name = "my-federated-graph"

[telemetry.tracing]

enabled = true

sampling = 1

[telemetry.tracing.propagation]

aws_xray = true

[telemetry.tracing.exporters.otlp]

enabled = true

endpoint = "http://:4317"

protocol = "grpc"

timeout = 5

```

---

# Gateway - Extensions

Extensions are a powerful feature of the Grafbase Gateway that lets you extend its functionality with custom code. You write extensions in Rust and compile them into a WebAssembly module that the [Grafbase Gateway](/docs/gateway/installation) loads. Extensions implement custom authentication, authorization, and resolving logic, and integrate with external services and APIs.

Read more about [configuring the extensions in gateway](/docs/gateway/configuration/extensions), and [a guide on implementing one](/guides/implementing-a-gateway-resolver-extension).

## Extensions Architecture

An extension is a WebAssembly module with a JSON manifest. You add them to your gateway installation through configuration, and the gateway loads them automatically when it starts.

Two types of extensions exist: resolver extensions and authentication extensions. Resolver extensions extend the functionality of the gateway's resolvers, while authentication extensions extend the functionality of the gateway's authentication system.

We mainly support Rust to implement extensions. Grafbase provides a [Rust SDK](https://docs.rs/grafbase-sdk/latest/grafbase_sdk/) that makes implementing extensions easy. The SDK provides traits that you implement to extend the functionality of the gateway's resolvers and authentication system.

## Extension Manifest

The extension manifest is a JSON file that describes the extension's metadata and configuration. The Grafbase Gateway uses it to load and configure the extension.

The manifest file must contain the following fields:

- `name`: The name of the extension.

- `version`: The version of the extension.

- `kind`: The type of the extension (`resolver` or `authentication`).

- `sdk_version`: The version of the Grafbase SDK used to implement the extension.

- `minimum_gateway_version`: The minimum version of the Grafbase Gateway required to run the extension.

- `sdl`: The GraphQL directives and types the extension provides.

This file is called `manifest.json` in the extension's root directory.

## Extension Implementation

The extension logic compiles into a WebAssembly component and implements the [WASI Preview 2](https://github.com/WebAssembly/WASI/blob/main/wasip2/README.md) standard. This gives WebAssembly components more tooling to perform previously impossible tasks. These include network access with TCP or UDP, file system access, environment variable access and more.

An extension configuration defines what features the specific extension can access in its sandbox. You configure per-extension options in the gateway configuration to control what the extension can and cannot access.

The [Grafbase Rust SDK](https://docs.rs/grafbase-sdk/latest/grafbase_sdk/) provides the traits for implementing an extension, and the [Grafbase CLI](/docs/cli/installation) provides the commands for building and deploying extensions.

## Vision

We believe the Grafbase Gateway should serve as a platform to combine multiple data sources into a single GraphQL API. Extensions enable us to provide faster implementation of connections that were not previously feasible in the gateway itself. Our simple development tooling makes it easier for you to implement such connections.

For example, if your company uses a service that requires a custom protocol, you can implement an extension to connect to that service.

Although we license the Grafbase Gateway under the Mozilla Public License 2.0, we do not expect you to follow the same licensing with extensions. You can either submit a pull request with your extension code to make it available to the community, or keep it private for internal use.

---

- [v1.0](/docs/gateway/extensions/specs/grafbase-spec/v1.0)

---

# Gateway - Extensions - Specs - Grafbase directives & types for extensions - v1.0

This document defines the directives and types that have special treatment in the Grafbase gateway. They are meant to be used as building blocks for extension directives. The gateway will automatically detect them and inject the right data in their stead.

Here after is the full definition:

```graphql

"""

String specifying a selection set over the arguments which should be provided at runtime.

"""

scalar InputValueSet

"""

String specifying a selection set over the response data which should be provided at runtime.

"""

scalar FieldSet

# Template family

"String template rendered at runtime with URL escaping."

scalar UrlTemplate

"String template rendered at runtime with JSON escaping."

scalar JsonTemplate

```

Import me with:

```graphql

extend schema

@link(url: "https://specs.grafbase.com/grafbase", import: ["FieldSet"])

```

## InputValueSet

```graphql

"""

String specifying a selection set over the arguments which should be provided at runtime.

"""

scalar InputValueSet

```

The `InputValueSet` scalar is used to inject arguments into a directive. It's a `String` that defines a selection set such as `"ids filter { age }"` on the field arguments with a special case `"*"` that will inject all the arguments as shown in the following example:

```graphql

# Extension SDL

directive @myDirective(input: InputValueSet) on FIELD_DEFINITION

# ---

# Subgraph SDL

type Query {

# Receives all arguments

users(ids: [ID!], filter: Filter): [User!] @myDirective(input: "*")

# Which is equivalent to the following

users(ids: [ID!], filter: Filter): [User!] @myDirective(input: "ids filter")

# Fine-grained selection

users(ids: [ID!], filter: Filter): [User!]

@myDirective(input: "filter { age }")

}

input Filter {

name: String

age: Int

}

type User {

id: ID!

}

```

Contrary to field selection sets used in operations, there is a relaxed rule for leaves: one can select `"filter"` without specifying any sub-selection despite being an input object. The whole `Filter` input object will be provided in that case.

`InputValueSet` can be used in any extension directive definitions, but outside of `FIELD_DEFINITION` location, any non-null `InputValueSet` value will raise an error:

```graphql

# Valid

type User @myDirective {

id: ID!

}

# Valid

type User @myDirective(input: null) {

id: ID!

}

# Invalid, will raise an error.

type User @myDirective(input: "*") {

id: ID!

}

```

## FieldSet

```graphql

"""

String specifying a selection set over the response data which should be provided at runtime.

"""

scalar FieldSet

```

The `FieldSet` scalar is used to inject response data into a directive. It's a `String` that defines a field selection set such as `"id name"` on the _current_ object or interface:

```graphql

# Extension SDL

directive @myDirective(fields: FieldSet) on FIELD_DEFINITION

# ---

# Subgraph SDL

type User {

id: ID!

name: String

pets(limit: Int!): [Pet!]

catLoverFriends: [User!]

@myDirective(fields: "id pets(limit: 10) { ... on Cat { id } }")

}

union Pet = Cat | Dog

type Dog {

id: ID!

}

type Cat {

id: ID!

}

```

Except named fragments, `FieldSet` accepts any valid field selection set with field arguments or inline fragments. The gateway will do its best to re-use existing operation fields if they match, but will request anything additional.

`FieldSet` can be used in any extension directive definitions, but outside of `FIELD_DEFINITION`, `OBJECT` and `INTERFACE` locations, any non-null `FieldSet` value will raise an error:

```graphql

# Valid

union Pet = Cat | Pet @myDirective

# Valid

union Pet = Cat | Pet @myDirective(fields: null)

# Invalid, will raise an error.

union Pet = Cat | Pet @myDirective(fields: "... on Cat { id }")

```

## Templates

```graphql

"String template rendered at runtime with URL escaping."

scalar UrlTemplate

"String template rendered at runtime with JSON escaping."

scalar JsonTemplate

```

Templates are rendered by the gateway before being sent over to the extension. We support two variants of templates:

- `UrlTemplate`: for URLs, values will be URL-encoded. Complex values such as objects or lists will be first serialized to JSON and then URL-encoded.

- `JsonTemplate`: for JSON, values will be rendered as their serialized JSON value.

The following variables are accessible in the template:

- `args`: the arguments of the field

We use a subset of [Mustache](https://mustache.github.io/) template syntax:

- values: `{{val}}`

- nested fields: `{{object.field}}`

- current object: `{{.}}`

- nested block such `{{#object}} {{field}} {{/object}}` scope the content to the parent value. So `field` refers to `object.field`. Furthermore if used on a list such as `{{#objects}} {{field}} {{/objects}}` will render `field` for all the objects in the list.

- We have custom blocks `-last` and `-first` which only render for the last element and the first element of a list respectively. But more importantly, we support their opposite: `^-last` and `^-first` rendering for all but the last and but the first respectively, which most notably allows building JSON lists:

```mustache

{{#objects}} {{field}} {{^-last}},{{/-last}} {{/objects}}

```

Here after are a few examples using `UrlTemplate` and `JsonTemplate`:

```graphql

# Extension SDL

directive @myDirective(url: UrlTemplate, json: JsonTemplate) on FIELD_DEFINITION

# ---

# Subgraph SDL

type Query {

# user(id: "1") -> "/users/1"

user(id: ID!): User @myDirective(url: "/users/{{ args.id }}")

# users(ids: ["1", "2"]) -> "/users?ids=%5B%221%22%2C%222%22%5D"

users(ids: [ID!]): [User!] @myDirective(url: "/users?ids={{ args.ids }}")

# users(ids: ["1", "2"]) -> "/users?ids=1,2"

users(ids: [ID!]): [User!]

@myDirective(

url: "/users?ids={{#args.ids}}{{.}}{{^-last}},{{/-last}}{{/args.ids}}"

)

# users(filter: { name: "Alice" }) -> `{"name":"Alice"}`

users(ids: [ID!], filter: Filter): [User!]

@myDirective(json: "{{ args.filter }}")

# users(filter: { name: "Alice" }) -> `"Alice"`

users(ids: [ID!], filter: Filter): [User!]

@myDirective(url: "{{ args.filter.name }}")

}

input Filter {

name: String

age: Int

}

type User {

id: ID!

}

```

---

- [v1.0](/docs/gateway/extensions/specs/composite-schemas-spec/v1.0)

---

# Gateway - Extensions - Specs - Composite schemas with Grafbase - v1.0

The GraphQL Composite Schemas Spec is being actively worked upon on [GitHub](https://github.com/graphql/composite-schemas-spec). Most importantly for readers are:

- [Source Schema directives](https://github.com/graphql/composite-schemas-spec/blob/main/spec/Section%202%20--%20Source%20Schema.md) defining the directives that may be used by subgraphs such as `@lookup`.

- [FieldSelectionMap specification](https://github.com/graphql/composite-schemas-spec/blob/main/spec/Appendix%20A%20--%20Field%20Selection.md) used in `@is` and `@require`.

The Grafbase Gateway partially implements the specification with the goal of implementing it fully over time. In addition, we also extend the specification to better fit the use case of non-GraphQL data sources.

The current document describe both the current implemented parts of the specification and any additions we made.

# Directives

Directives can be imported with `@link` similarly to Apollo Federation's directives:

```graphql

extend schema

@link(

url: "https://specs.grafbase.com/composite-schemas/v1"

import: ["@lookup", "@key"]

)

```

In all examples within this document, directives will be imported with this `@link` directive if not specified explicitly.

It is not possible to use both Apollo Federation and Composite Schemas together in the same subgraphs. But a super graph can be composed of subgraphs used either.

## Not implemented

- `@internal`

- `@require` (coming soon)

## Common directives with Apollo Federation

`@external`, `@inaccessible`, `@shareable`, `@provides` and `@override` are implemented in the same way for both Apollo Federation and Composite Schemas.

`@key` in Composite Schemas is treated as a non-resolvable Apollo Federation `@key`. So `@key(fields: "id")` is implicitly `@key(fields: "id", resolvable: false)`.

Resolvable in that context means that the subgraph can provide the entity through Apollo Federation's custom `_entities` field. So `@key` in Composite Schemas only

defines the key fields, without specifying how the entities can be retrieved. A subgraph only defining the following does not provide any means for the supergraph to

retrieve a `Post` entity. `_entities` and `_service` are not assumed to exist. See `@lookup` directive to provide entities.

```graphql

extend schema

@link(

url: "https://specs.grafbase.com/composite-schemas/v1"

import: ["@key"]

)

type Post @key(fields: "id") {

id: ID!

}

```

## @lookup

```graphql

directive @lookup on FIELD_DEFINITION

```

`@lookup` defines how entities can be accessed by the supergraph. In the following example `@lookup` would automatically be used to a `Post` entity.

```graphql

type Query {

post(id: ID!): Post! @lookup

}

type Post @key(fields: "id") {

id: ID!

}

```

Today the Grafbase Gateway only supports the batch variant, which doesn't exist in the Composite Schemas spec, for extensions like [Postgres](/extensions/postgres):

```graphql

type Query {

posts(ids: [ID!]): [Post!] @lookup

}

type Post @key(fields: "id") {

id: ID!

}

```

`@lookup` will automatically detect the right argument to inject for single and composite keys as long as there isn't any ambiguity. Only one `@lookup` can exists in a subgraph for any given `@key`.

Support for single lookup for any resolver extensions/GraphQL subgraphs and explicit mapping with `@is` is coming soon.

## @derive

```graphql

directive @derive on FIELD_DEFINITION

```

The `@derive` directive creates a virtual entity field when the original data only exposes ids.

It doesn't exist in the Composite Schemas Spec, we added it to simplify the integration of non-Graphql data sources such as REST or gRPC.

```graphql

type Query {

posts: [Post!]!

}

type Post {

authorId: ID!

# This field is not provided by the subgraph

author: User! @derive

}

type User @key(fields: "id") {

id: ID!

}

```

The `author` field is resolved by the supergraph, derived from the `authorId` field. This simple adjustment makes it possible to query fields from other subgraphs:

```graphql

{

posts {

author {

id

name

}

}

}

```

`@derive` will, similarly to `@lookup`, automatically detect the relevant fields based on their name and type given the defined `@key`.

A derived field _must_ match at least one `@key`.

It may provide more fields or multiple keys, and you can also hide the real subgraphs fields from your final API schema with `@inaccessible`:

```graphql

type Post {

authorId: ID! @inaccessible

# This field is not provided by the subgraph

author: User! @derive

}

```

`@derive` supports multiple use cases and can be explicitly specified with the help of the `@is` directive:

- Single key:

```graphql

type Post {

authorId: ID!

author: User! @derive

# or

author: User! @derive @is(field: "{ id: authorId }")

}

type User @key(fields: "id") {

id: ID!

}

```

- Composite key:

```graphql

type Post {

authorTenantId: ID!

authorEmail: String!

author: User! @derive

# or

author: User!

@derive

@is(field: "{ tenantId: authorTenantId email: authorEmail }")

}

type User @key(fields: "tenantId email") {

tenantId: ID!

email: String!

}

```

- Single key list

```graphql

type Post {

commentIds: [ID!]

comments: [Comment!]! @derive

# or

comments: [Comment!]! @derive @is(field: "commentIds[{ id: . }]")

}

type Comment @key(fields: "id") {

id: ID!

}

```

- Composite key list

```graphql

type Post {

reviewersTenantIdAndEmail: [TenantIdAndEmail!]!

reviewers: [User!]! @derive

# or

reviewers: [User!]!

@derive

@is(field: "reviewersTenantIdAndEmail[{ tenantId email }]")

}

type TenantIdAndEmail {

tenantId: ID!

email: String!

}

type User @key(fields: "tenantId email") {

tenantId: ID!

email: String!

}

```

The only limit to `@derive` today is that nested fields are not supported.

## @is

See `@lookup` and `@derive` for more information.

---

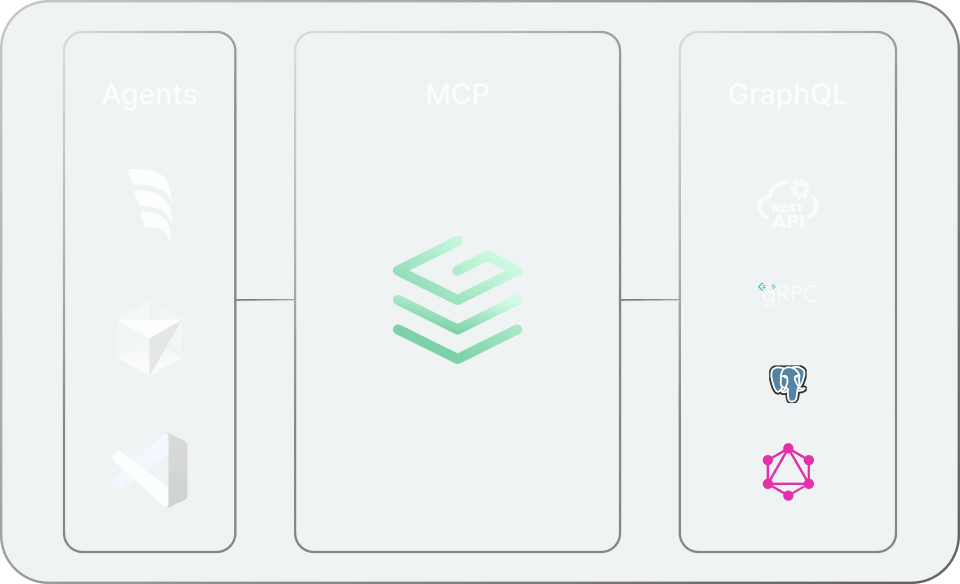

# Gateway - MCP

MCP is a new protocol, launched in November 2024 by [Anthropic](https://anthropic.com), designed to make structured data explorable and actionable via natural language. Grafbase offers MCP support out of the box - removing the need to stand up your own standalone MCP server, configure authentication, or fine-tune access control.

## Get started with Cursor

The Grafbase MCP server can be started with the [Grafbase CLI](/docs/cli/installation) by running:

```bash

npx grafbase mcp

```

The MCP server listens to requests at `http://127.0.0.1:5000/mcp` by default. To add it to Cursor, create a `.cursor/mcp.json` file in your project with the following:

```json

{

"mcpServers": {

"my-graphql-api": {

"url": "http://127.0.0.1:5000/mcp"

}

}

}

```

## Setting up MCP in the Grafbase Gateway

The [Grafbase Gateway](/docs/gateway/installation) can be configured to expose a MCP endpoint with the following `grafbase.toml` configuration:

```toml

[mcp]

enabled = true # defaults to false

# Path at which to expose the MCP service

path = "/mcp"

# Whether mutations can be executed

execute_mutations = false

---

# Gateway - Observability

The Grafbase Gateway lets you monitor gateway operations and errors through logs, traces, and metrics. When you use the gateway with a Grafbase access token, the Grafbase dashboard receives gateway operation analytics automatically.

The gateway also supports sending monitoring data to endpoints that implement [OpenTelemetry](https://opentelemetry.io/) protocols. You can combine gateway traces with other services in your platform, and access more metrics beyond what the Grafbase dashboard currently shows.

## Logs

The Grafbase Gateway provides logs for monitoring gateway operations and errors. By default, it outputs logs to standard output. Additionally, the gateway can send monitoring data to an endpoint that implements the [OpenTelemetry](https://opentelemetry.io/) protocols.

### Level of Produced Information

You can define the level of information by setting the log level command line argument:

```bash

--log

Set the logging level, this applies to all spans, logs and trace events.

Beware that *only* 'off', 'error', 'warn' and 'info' can be used safely in

production. More verbose levels, such as 'debug', will include sensitive

information like request variables, responses, etc.

Possible values are: 'off', 'error', 'warn', 'info', 'debug', 'trace' or a

custom string. In the last case, the string is passed on to

[`tracing_subscriber::EnvFilter`] as is and is only meant for debugging

purposes. No stability guarantee is made on the format.

[env: GRAFBASE_LOG=]

[default: info]

```

This setting affects both traces and logs. The default level is `info`. `debug` and `trace` will include sensitive details and should not be used in production.

The `error` or `off` levels affect all traces and spans at `info` level. If you want to silence all logs but still export them along with traces and metrics to an OpenTelemetry endpoint, direct standard output and standard error to `/dev/null`.

### System Logs

By default, the system outputs logs to standard output. Logs can appear in two different formats:

```bash

--log-style

Set the style of log output

[env: GRAFBASE_LOG_STYLE=]

[default: pretty]

Possible values:

- pretty: Pretty printed logs, used as the default in the terminal

- text: Standard text, used as the default when piping stdout to a file

- json: JSON objects

```

The default style is `pretty`, inside a terminal, which provides ANSI-colored text for terminal output and a human-friendly formatting. When piping to a file, `text` will be used instead.The `json` format delivers logs in JSON format, which can be useful if the logging platform supports structured data.

### Access Logs

The Grafbase Gateway can log access requests. Read more on the [access logs feature](/docs/gateway/security/access-logs) and configuring them in the [gateway configuration](/docs/gateway/configuration/gateway#access-logs).

### Logging into an OpenTelemetry Endpoint

The Grafbase Gateway can send logs to an OpenTelemetry endpoint. To enable this feature, either define the global telemetry endpoint or an endpoint for the logs exporter. Read more about the [OpenTelemetry configuration](/docs/gateway/configuration/telemetry#log-exporter).

## Traces

Grafbase Gateway monitors the request lifecycle by providing traces. Add a valid access token in the `GRAFBASE_ACCESS_TOKEN` environment variable to automatically send traces to the Grafbase Dashboard or Grafbase Enterprise platform.

The dashboard displays only traces from the Grafbase Gateway. Configure a different OpenTelemetry endpoint in the configuration file to send traces elsewhere. A third-party telemetry platform lets you [propagate traces](/docs/gateway/configuration/telemetry#propagation) from the gateway with other services in your platform. Traces provide information on the request lifecycle and send data to the OpenTelemetry endpoint from the `info` level.

Read more about the [telemetry configuration](/docs/gateway/configuration/telemetry) and [tracing spans and attributes](/docs/gateway/telemetry/tracing-attributes).

## Metrics

The Grafbase Gateway delivers metrics for requests and operations to an OpenTelemetry endpoint. Metrics include counters, histograms, and gauges at various points in the system.

To automatically send metrics to the Grafbase Dashboard or Grafbase Enterprise platform, add a valid access token in the `GRAFBASE_ACCESS_TOKEN` environment variable. [Configure an additional OpenTelemetry exporter](/docs/gateway/configuration/telemetry#metrics-exporter) in the configuration file to send metrics to other destinations.

The [metrics reference](/docs/gateway/telemetry/metrics-attributes) lists all metrics and their attributes.

---

# Gateway - Performance - Automatic Persisted Queries

Automatic Persisted Queries let you avoid sending the query string each time and help prepare the execution. By default, they are enabled in the Grafbase Gateway. You can [disable them from the configuration](/docs/gateway/configuration/automatic-persisted-queries).

First, associate a query with a unique identifier: the SHA-256 hash of the query string:

```text

# SHA-256

4ef8d269e7944ef2cd6554ecb3d73164546945cf935806933448905abec554e5

```

```graphql

query {

__typename

}

```

You can persist the query at any time. Send the following payload, and the system caches it for a day:

```json

{

"query": "query { __typename }",

"extensions": {

"persistedQuery": {

"version": 1,

"sha256Hash": "4ef8d269e7944ef2cd6554ecb3d73164546945cf935806933448905abec554e5"

}

}

}

```

The next time you execute the same query, omit the query field as follows:

```json

{

"extensions": {

"persistedQuery": {

"version": 1,

"sha256Hash": "4ef8d269e7944ef2cd6554ecb3d73164546945cf935806933448905abec554e5"

}

}

}

```

If Grafbase does not find the query, it returns the following error:

```json

{

"errors": [

{

"message": "Persisted query not found",

"extensions": {

"code": "PERSISTED_QUERY_NOT_FOUND"

}

}

]

}

```

In this case, send the request with the full query string.

## Using GET requests

Grafbase also supports executing GET HTTP requests. You must pass fields as query parameters, encoding their values in JSON format first. To execute a persisted query:

```bash

curl --get 'http://localhost:4000/graphql' \

--data-urlencode 'extensions={"persistedQuery":{"version":1,"sha256Hash":"4ef8d269e7944ef2cd6554ecb3d73164546945cf935806933448905abec554e5"}}'

```

To register a query:

```bash

curl --get 'http://localhost:4000/graphql' \

--data-urlencode 'query=query { __typename }' \

--data-urlencode 'extensions={"persistedQuery":{"version":1,"sha256Hash":"4ef8d269e7944ef2cd6554ecb3d73164546945cf935806933448905abec554e5"}}'

```

---

# Gateway - Performance - Entity Caching

Grafbase Gateway uses Entity Caching to cache requests to subgraphs. Enable entity caching globally in the [global entity cache config section](/docs/gateway/configuration/entity-cache). Every subgraph can define its own cache policies - learn more in the [per-subgraph entity cache config section](/docs/gateway/configuration/subgraph-configuration#entity-cache). The system protects user data by scoping cached data, and uses all headers to compute the scope.

Entity caching stores data in an in-memory cache by default. Configure Redis as your caching backend when you need to run and share cache across multiple gateways. Learn more about [configuring Redis for entity cache](/docs/gateway/configuration/entity-cache#using-redis-for-entity-caching).

TLS with Redis increases response times, and each request requires at least one call to the Redis server. Place the Redis server as close as possible to the gateway instances and avoid using TLS for the counters.

---

# Gateway - Performance - Compression

Grafbase Gateway supports compression for:

- the body of responses from the Gateway (using the standard `Accept-Encoding` header)

- the body of responses from subgraphs for requests issued by the Gateway (using the standard `Accept-Encoding` header)

All the common compression algorithms are supported: gzip, deflate, br (brotli) and zstd.

---

# Gateway - Security

Security is a critical aspect of any application. It is important to ensure that the application is secure and that the data is protected. This section will cover the security aspects of the application.

## Control the Operations with Trusted Documents

The application should control the operations that can be performed on the data. This can be done by using trusted documents that define the operations that can be performed on the data. The application should only allow the operations that are defined in the trusted documents.

Read more about [trusted documents](/docs/gateway/security/trusted-documents).

## Rate Limiting

Rate limiting is an important security measure that can help protect the application from abuse. It can help prevent malicious users from overwhelming the application with requests. Rate limiting can be implemented at various levels, such as the global operation level, or the per-subgraph level.

Read more about [rate limiting](/docs/gateway/security/rate-limiting).

## Operation Limits

Operation limits can help protect the application from abuse by limiting the cost of operations.

Read more about [operation limits](/docs/gateway/security/operation-limits).

## Authentication

Restrict access to your federated graph, authenticating users.

Read more about [authentication](/docs/gateway/security/authentication).

## Authorization

Restrict access to fields, objects, and more for a given operation.

Read more about [authorization](/docs/gateway/security/authorization).

## Access Logs

Access logs can help you monitor and track the activity on your federated graph. Grafbase Gateway provides a fully customizable logging system that allows you to log the requests and responses.

Read more about [access logs](/docs/gateway/security/access-logs).

## Access Tokens

Access tokens are used to authenticate users and grant them access to the federated graph. Access tokens can be used to control access to the federated graph and to protect the data.

Read more about [access logs](/docs/gateway/security/access-logs).

---

# Gateway - Security - Trusted Documents

GraphQL APIs provide clients with considerable flexibility to query any data they need. This flexibility represents one of GraphQL's major strengths, but it also introduces vulnerabilities. When any client can query any data, malicious or careless queries can create excessive load on the server. Trusted Documents solve this problem.

The concept has existed since the early days of GraphQL, using terms like _persisted queries_ or _persisted operations_. An API that uses Trusted Documents accepts only GraphQL documents (queries, operations) submitted (trusted) at development or deployment time. Instead of sending the whole document, clients send a more compact _document id_. This approach enhances security by rejecting malicious queries and improves performance by transmitting only the document id, similar to what occurs in [Automatic Persisted Queries](/docs/gateway/performance/automatic-persisted-queries).

## Overview

Adopting Trusted Documents places constraints primarily on API clients. To enforce trusted documents in a Grafbase API, you simply set a single option in `grafbase.toml` (see below).

We will begin by exploring the more complex aspects of adopting Trusted Documents and then explain how to enforce them.

## Trusting Documents: Upload a Manifest

The purpose of Trusted Documents is to accept only queries on an allow-list. Start by generating and communicating that list. We call the allow-list document a **manifest**, and it takes the form of a JSON file. Your GraphQL client setup of choice creates the manifest.

The two most common setups for generating a trusted documents manifest are [Relay Persisted Queries](https://relay.dev/docs/guides/persisted-queries/#local-persisted-queries) and Apollo Client operation manifests ([JS](https://www.apollographql.com/docs/react/api/link/persisted-queries/#1-generate-operation-manifests), [Kotlin](https://www.apollographql.com/docs/kotlin/advanced/persisted-queries/), [iOS](https://www.apollographql.com/docs/ios/fetching/persisted-queries/#2-generate-operation-manifest)). Grafbase natively supports both Relay and Apollo Client manifest formats. If you need support for another setup or manifest format, please [contact us](/contact).

After you create a manifest JSON file that includes the GraphQL documents your application needs and the associated document IDs, submit the manifest using the `grafbase` CLI:

```bash

grafbase trust my-account/my-graph@main --manifest manifest.json --client-name ios-client

```

Let's break down the arguments:

- `grafbase trust my-account/graph-name@main`: Like many other CLI commands, `trust` requires a graph reference in the format `/@`. Remember that you must include the branch name to avoid defaulting to the production branch, which can introduce security risks.

- `--manifest manifest.json`: Provide the file path to the JSON file generated by your client of choice.

- `--client-name`: Each client of an API using trusted documents must identify itself with a client name using the `x-grafbase-client-name` HTTP header. Read on for more details.

After you submit the manifest, the API trusts the GraphQL documents in the manifest, associating them with their corresponding document IDs. **The trust command applies to a single branch and a single client name**. To enforce trusted documents across multiple branches or clients, you must trust the relevant documents for each combination.

## Trusted Documents in the Client: Runtime Components

In the previous section, we uploaded the trusted document manifests. Now, our API knows which documents to expect. Our GraphQL client needs to change its requests to the API in two ways:

1. Send the `x-grafbase-client-name` header with the same name used when submitting the manifest with `grafbase trust`.

2. Send the trusted document IDs instead of the document body in GraphQL requests.

For example [in Relay](https://relay.dev/docs/guides/persisted-queries/#network-layer-changes):

```ts

function fetchQuery(operation, variables) {

return fetch('/graphql', {

method: 'POST',

headers: {

'content-type': 'application/json',

'x-grafbase-client-name': 'ios-app',

},

body: JSON.stringify({

// `doc_id` is also accepted.

documentId: operation.id, // NOTE: pass md5 hash to the server

// query: operation.text, // this is now obsolete because text is null

variables,

}),

}).then(response => {

return response.json()

})

}

```

or with [Apollo Client](https://www.apollographql.com/docs/react/api/link/persisted-queries/#persisted-queries-implementation):

```ts

import { ApolloClient, HttpLink, InMemoryCache } from '@apollo/client'

import { createPersistedQueryLink } from '@apollo/client/link/persisted-queries'

import { generatePersistedQueryIdsFromManifest } from '@apollo/persisted-query-lists'

const httpLink = new HttpLink({

uri: 'http://localhost:4000/graphql',

headers: {

'x-grafbase-client-name': 'ios-app',

},

})

const persistedQueryLink = createPersistedQueryLink(

generatePersistedQueryIdsFromManifest({

loadManifest: () => import('./path/to/persisted-query-manifest.json'),

}),

)

const client = new ApolloClient({

cache: new InMemoryCache(),

link: persistedQueriesLink.concat(httpLink),

})

```

## Enforcing Trusted Documents with the Self-hosted Grafbase Gateway

On the server side, the process is straightforward. You will find one relevant section in `grafbase.toml`:

```toml

[trusted_documents]

enabled = true

enforced = false

bypass_header_name = "my-header-name" # default null

bypass_header_value = "my-secret-is-{{ env.SECRET_HEADER_VALUE }}" # default null

```

See the [reference documentation](/docs/gateway/configuration/trusted-documents) for a list of all the options and their effects.

---

# Gateway - Security - Rate Limiting

The Grafbase Gateway offers ways to limit the number of requests per time window either globally or per subgraph. You can define the limit in memory per gateway instance or utilize a Redis backend to share the limit state with multiple gateway instances. Read more on [global rate limit configuration](/docs/gateway/configuration/gateway#rate-limit) and [per-subgraph rate limit configuration](/docs/gateway/configuration/subgraph-configuration#rate-limit).

## Using In-memory Rate Limiting

The default in-memory rate limiter uses the [generic cell rate algorithm](https://en.wikipedia.org/wiki/Generic_cell_rate_algorithm), which is a leaky bucket type scheduling algorithm. This method accurately limits sudden request bursts even before the current time window reaches its limit. It provides the fastest performance because the engine requires no network requests per GraphQL operation. When you restart the gateway with the in-memory rate limiter, the rate limit data starts empty.

## Using Redis for Rate Limiting

If you need to run multiple gateways and share the rate limit data with all of them, configure the gateway to use Redis as the rate limiter backend. Read more on [configuring Redis for rate limiting](/docs/gateway/configuration/gateway#using-redis-as-rate-limit-storage).

The Redis implementation uses an averaging fixed window rate limiting, which is different from the generic cell rate algorithm of the in-memory implementation. The Redis implementation generates two temporary keys to the database:

- `{key_prefix}:{subgraph:subgraph_name || global}:{current_time_bucket}`

- `{key_prefix}:{subgraph:subgraph_name || global}:{previous_time_bucket}`

The system fetches both values in a single Redis request, counts how far we are in the current time window, and calculates an averaged request count. The algorithm prevents spikes at the window border with an accuracy of a few percent.

Adding to the counter in the current time bucket happens off-thread, and the system deletes the buckets from the database after the time window ends.

The rate-limiting happens in a hot path, so the Redis server should be as close as possible to the gateway instances. Avoid using TLS for the counters to reduce the number of round trips to the Redis server.

---

# Gateway - Security - Operation Limits

One of the most common attacks malicious actors do to GraphQL APIs is sending complex and deeply nested queries to overload the server. Operation Limits allow you to protect your GraphQL API from these types of attacks. [Read more on configuring the operation limits](/docs/gateway/configuration/operation-limits).

## Depth

Limits the deepest nesting of selection sets in an operation, including fields in fragments.

Here's how depth is calculated:

```graphql

query GetProduct {

product(id: "123") {

# depth 1

title # depth 2

brand {

name # depth 3

}

}

}

```

To configure depth, add the following to your `grafbase.toml` file:

```toml

[operation_limits]

depth = 3

```

## Height

Limits the number of unique fields included in an operation, including fields of fragments. If a particular field is included multiple times via aliases, it's counted only once.

Here's how height is calculated:

```graphql

query GetProduct {

product(id: "123") {

# height 1

id # height 2

name # height 3

title: name # aliases don't count

}

}

```

To configure height, add the following to your `grafbase.toml` file:

```toml

[operation_limits]

height = 20

```

## Aliases

Limits the total number of aliased fields in an operation, including fields of fragments.

Here's how aliases are calculated:

```graphql

query GetProduct {

product(id: "123") {

title: name # alias 1

something: name # alias 2

else: name # alias 3

}

}

```

To configure aliases, add the following to your `grafbase.toml` file:

```toml

[operation_limits]

aliases = 10

```

## Root Fields

Limits the number of root fields in an operation, including root fields in fragments. If a particular root field is included multiple times via aliases, each usage is counted.

Here's how root fields are calculated:

```graphql

query GetProducts {

topBooks {

# root field 1

id

}

topMovies {

# root field 2

id

}

topGames {

# root field 3

id

}

}

```

To configure root fields, add the following to your `grafbase.toml` file:

```toml

[operation_limits]

root_fields = 10

```

## Complexity

Complexity takes the number of fields as well as the depth and any pagination arguments into account. Every scalar field adds 1 point, every nested field adds 2 points, and every pagination argument multiplies the nested objects score by the number of records fetched.

Here's how root fields are calculated:

```graphql

query {

# total: 18

products(limit: 2) {

# (Nested: 2 + 1 + 1 + 1 + (author: 2 + 1 + 1)) * limit: 2 = 18

id # scalar: 1

title # scalar: 1

price # scalar: 1

brand {

# nested: 4 (2 + 1 + 1)

id # scalar: 1

name # scalar: 1

}

}

}

```

To configure complexity, add the following to your `grafbase.toml` file:

```toml

[operation_limits]

complexity = 100

```

---

# Gateway - Security - Complexity control

GraphQL queries offer lots of flexiblity to build the queries you need. But

this flexibility can be abused, causing excess load on downstream servers.

[Operation limits][op-limits] allow users to set a high water mark on many of

the properties of a GraphQL query. But they are quite a blunt tool - not all

subgraphs have the same performance characteristics and even within a subgraph

not all fields neccesarily cause the same load.

That's where complexity control comes in: it allows you to set an overall

complexity limit in the Grafbase Gateway, but leaves the definition of how

complex each field is up to the developers of the subgraphs.

Read more on [configuring the complexity control][config].

## Configuring Complexity

It's up to each individual subgraph to define the compleixty of it's fields.

This can be acheived with two directives: `@cost` & `@listSize`

### Field Cost

The `@cost` directive is defined as such:

```graphql

directive @cost(

weight: Int!

) on ARGUMENT_DEFINITION | ENUM | FIELD_DEFINITION | INPUT_FIELD_DEFINITION | OBJECT | SCALAR

```

This directive can be provided on a field, argument, or type. When provided on

a field or argument it sets the cost of that field or argument appearing in a

query. When provided on a type it sets the cost of a field of that type

appearing in a query. If an individual field has a cost then that will be

override any cost set on the type of that field.

If no cost directive can be found for a particular field or it's type, then a

default cost will be applied. If the field is of a scalar type, then its cost

is assumed to be zero. If the field is of an object type, then its default

cost is 1.

### List Size

The `@listSize` directive is defined as such:

```graphql

directive @listSize(

assumedSize: Int

slicingArguments: [String!]

sizedFields: [String!]

requireOneSlicingArgument: Boolean = true

) on FIELD_DEFINITION

```

This directive controls the size that we assume each list field has. In brief

it's arguments are:

- `assumedSize` if provided sets the size that we assume this list is.

- `slicingArguments` says that the given arguments to this field define the

length of the list.

- `sizedFields` can be used on connection fields that are following the [GraphQL

cursor specification][cursor-spec] to indicate which subfields of the current

field are controlled by the slicing arguments on this field.

- `requireOneSlicingArgument` can be set when slicing arguments is also set.

If set an error will be raised if we receive a query for this field that

doesn't have exactly one slicing argument provided. This argument defaults

to true, but if slicingArguments is not provided it is not used.

For more details you can read the detailed specification of `@listSize` in the

[Cost Directive Specification][cost-spec].

## Complexity Calculation

The complexity score of an operation is calculated by walking the query, and

summing up the cost of each individual field. Fields are assigned a cost

according to the cost of the field or the type of the field, plus the cost of

all their children. If the field in question is a list then its cost is

multiplied by the expected size of the list.

For example this query would be calculated as:

```graphql

query {

# (self + children) * listSize = (1 + 1) * 4 = 8

products(limit: 4) {

id # scalar: 0

title # scalar: 0

price # scalar: 0

author {

# object: 1

id # scalar: 0

name # scalar: 0

}

}

}

```

[op-limits]: /docs/gateway/security/operation-limits

[cost-spec]: https://ibm.github.io/graphql-specs/cost-spec.html#sec-The-List-Size-Directive

[cursor-spec]: https://relay.dev/graphql/connections.htm

[config]: /docs/gateway/configuration/complexity-control

---

# Gateway - Security - Message Signatures

The Grafbase Gateway can sign subgraph HTTP requests following [RFC 9421][rfc9421]. Read more on [configuring message signatures][config].

## Keys

A key file should be provided in the config. This key file should be one of:

1. A JSON file containing a JWK.

2. A PEM file containing a PKCS#8 private key.

### Algorithms

We'll choose which algorithm to use based on the key file provided, but a

specific algoithm can be provided in the configuration.

The available algorithms are:

- `hmac-sha256`

- `ed25519`

- `ecdsa-p256-sha256`

- `ecdsa-p384-sha384`

If the provided key & algorithm don't match the gateway will refuse to start.

The algorithm you use for singing can have an impact on the latency of your

subgraph requests. The list above is in performance order, from most

performant to least performant. We recommend testing your chosen algorithm &

settings if this is a concern - a message signing span will be output in

tracing that can be used to determine the impact of your settings.

## Controlling Signing

The Grafbase Gateway allows you to control which parts of a subgrah request are

used as input to message signing. There are several settings for this:

- The `headers` key can control which headers are included or excluded. It has

two sub-keys:

1. `include` should be a list of headers to include in the signature.

If not present, all headers will be included.

2. `exclude` should be a list of headers to exclude from the signature.

This setting takes precedence over `include`

- The `derived_components` key allows you to control which "derived

components" are included. This defaults to `["request_target"]`. The

following options are available:

- `method` the HTTP method.

- `target_uri` the full URL of the request

- `authority` the hostname of the requests target URL

- `scheme` the scheme of the requests target URL

- `request_target` the [request target][request-target] of the request.

- `path` the absolute path of the request URL

- The `signature_parameters` key is a list of additional signature parameters

to include. It currently only has one setting:

- `nonce` can be provided to include a random nonce in every requests

signature.

- `expiry` can be set to a duration string. (e.g. `"30s"` for 30 seconds). If

provided, signatures will expire after this duration.

Here is an example of these settings:

[config]: /docs/gateway/configuration/message-signatures

[rfc9421]: https://datatracker.ietf.org/doc/html/rfc9421

[request-target]: https://datatracker.ietf.org/doc/html/rfc9421#name-request-target

---

# Gateway - Security - Authentication

Authentication extensions are available in the [Marketplace](/extensions):

- [JWT](/extensions/jwt): Validates a JWT token

You can learn how authentication extensions work and build your own with this follow along tutorial: [Customize your GraphQL Federation authentication and authorization with Grafbase Extensions](/blog/custom-authentication-and-authorization-in-graphql-federation).

A complete example can be found on [GitHub](https://github.com/grafbase/grafbase/tree/main/examples/authorization) and the [Grafbase SDK](https://docs.rs/grafbase-sdk/latest/grafbase_sdk/) is the extension reference.

---

Authorization extensions are available in the [Marketplace](/extensions):

- [Authenticated](/extensions/authenticated): Restrict access to unauthenticated clients.

- [Requires Scopes](/extensions/requires-scopes): Grant access only to clients with appropriate OAuth scopes.

You can learn how authorization extensions work and build your own with this follow along tutorial: [Customize your GraphQL Federation authentication and authorization with Grafbase Extensions](/blog/custom-authentication-and-authorization-in-graphql-federation).

A complete example can be found on [GitHub](https://github.com/grafbase/grafbase/tree/main/examples/authorization) and the [Grafbase SDK](https://docs.rs/grafbase-sdk/latest/grafbase_sdk/) is the extension reference.

---

# Gateway - Security - Access Logs

Track the activity of the Grafbase Gateway with access logs. These logs require custom configuration and definition through gateway hooks, unlike system logs. Collect data about request execution and response at three points in the request lifecycle: [after a subgraph request](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/trait.Hooks.html#method.on_subgraph_response), [after an operation](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/trait.Hooks.html#method.on_operation_response), and [right before sending a response to the user](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/trait.Hooks.html#method.on_http_response).

First, check out our [hooks guide](/guides/implementing-gateway-hooks) to learn the basics of custom hook implementation.

## Usage

Enable access logs in the Gateway configuration:

```toml

[gateway.access_logs]

enabled = true

path = "/path/to/logs"

```

[Read more](/docs/gateway/configuration/gateway#access-logs) on configuration options.

With access logs enabled, invoking the [`host_io::access_log::send`](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/host_io/access_log/fn.send.html) function will append the specified bytes to a file called `access.log` in the configured path.

The first two hooks can return a set of bytes. The return values of [`on_subgraph_response` hooks](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/trait.Hooks.html#method.on_subgraph_response) appear in the [`ExecutedOperation`](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/struct.ExecutedOperation.html#structfield.on_subgraph_response_outputs) of the [`on_operation_response` hook](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/trait.Hooks.html#method.on_operation_response), and the outputs of `on_operation_response` hooks are in the [`ExecutedHttpRequest`](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/struct.ExecutedHttpRequest.html#structfield.on_operation_response_outputs) of the [`on_http_response` hook](https://docs.rs/grafbase-hooks/latest/grafbase_hooks/trait.Hooks.html#method.on_http_response).

## Metrics

The [metrics counter](/docs/gateway/telemetry/metrics-attributes#pending-access-logs) `grafbase.gateway.access_log.pending` increments with each `log-access` call and decrements once the bytes are written to the `access.log`. Monitoring this value is crucial. Each `access_log::send` call consumes memory until data gets written, and the channel can hold a maximum of 128,000 messages. For the `blocking` access log method, a full channel will block all `access_log::send` calls, while the `non-blocking` method returns errors, sending data back to the caller.

## Implementing the Hooks in Rust

Use the [hooks guide](/guides/implementing-gateway-hooks) as the basis for the access logs implementation. The template project is a Rust project with the necessary dependencies and build instructions to compile the project.

The `Cargo.toml` file provides the dependencies and build instructions to compile the project:

```toml

[package]

name = "my-hooks"

version = "0.1.0"

edition = "2021"

license = "MIT"

[dependencies]

grafbase-hooks = { version = "*", features = ["derive"] }

[lib]

crate-type = ["cdylib"]

[profile.release]

codegen-units = 1

opt-level = "s"

debug = false

strip = true

lto = true

```

You need to implement all the response hooks:

```rust

use grafbase_hooks::{

grafbase_hooks, SharedContext, ExecutedHttpRequest,

ExecutedOperation, ExecutedSubgraphRequest, Hooks,

};

struct MyHooks;

#[grafbase_hooks]

impl Hooks for MyHooks {

fn new() -> Self

where

Self: Sized,

{

Self

}

fn on_subgraph_response(

&mut self,

context: SharedContext,

request: ExecutedSubgraphRequest

) -> Vec {

Vec::new()

}

fn on_operation_response(

&mut self,

context SharedContext,

operation: ExecutedOperation

) -> Vec {

Vec::new()

}

fn on_http_response(

&mut self,

context: SharedContext,

request: ExecutedHttpRequest

) { }

}

grafbase_hooks::register_hooks!(Component);

```

The implementation is the simplest possible and doesn't really do anything.

We start building the access log row in the subgraph response handler. By using the `postcard` and `serde` crates to (de-)serialize the data:

```rust

#[derive(serde::Serialize, serde::Deserialize)]

pub struct SubgraphInfo<'a> {

pub subgraph_name: &'a str,

pub method: &'a str,

pub url: &'a str,

pub has_errors: bool,

pub cached: bool,

}

#[derive(serde::Serialize, serde::Deserialize)]

pub struct OperationInfo<'a> {

pub name: Option<&'a str>,

pub document: &'a str,

pub subgraphs: Vec>,

}

#[derive(serde::Serialize, serde::Deserialize)]

pub struct AuditInfo<'a> {

pub method: &'a str,

pub url: &'a str,

pub status_code: u16,

pub operations: Vec>,

}

```

The first three hooks manage aggregation of the data, and the last hook writes the data to the log file:

```rust

#[grafbase_hooks]

impl Hooks for MyHooks {

fn new() -> Self

where

Self: Sized,

{

Self

}

fn on_subgraph_response(

&mut self,

_: SharedContext,

request: ExecutedSubgraphRequest

) -> Vec {

let info = SubgraphInfo {

subgraph_name: &request.subgraph_name,

method: &request.method,

url: &request.url,

has_errors: request.has_errors,

cached: matches!(request.cache_status, CacheStatus::Hit),

};

postcard::to_stdvec(&info).unwrap()

}

fn on_operation_response(

&mut self,

_: SharedContext,

operation: ExecutedOperation

) -> Vec {

let info = OperationInfo {

name: request.name.as_deref(),

document: &request.document,

subgraphs: request

.on_subgraph_response_outputs

.iter()

.filter_map(|bytes| postcard::from_bytes(bytes).ok())

.collect(),

};

postcard::to_stdvec(&info).unwrap()

}

fn on_http_response(

&mut self,

_: SharedContext,

request: ExecutedHttpRequest

) {

let info = AuditInfo {

method: &request.method,

url: &request.url,

status_code: request.status_code,

operations: request

.on_operation_response_outputs

.iter()

.filter_map(|bytes| postcard::from_bytes(bytes).ok())

.collect(),

};

grafbase_hooks::host_io::access_log::send(&serde_json::to_vec(&info).unwrap()).unwrap();

}

}

```

This code performs three main tasks:

1. Serializes information from each subgraph call into a `SubgraphInfo` struct.

2. Creates an `OperationInfo` struct from each operation call and combines related subgraph calls into it.

3. Builds an `AuditInfo` struct from each HTTP request and combines related operation calls into it.

The last hook calls the `access_log::send` method to serialize the final result to JSON, written to the access log.

The serialization of data can vary as long as it returns bytes. As an example, if your access.log contains JSON data, define structured data as [Serde](https://serde.rs/) structures in the hooks and serialize them into bytes using [serde_json](https://crates.io/crates/serde_json).

See a [full example project](https://github.com/grafbase/grafbase/tree/main/examples/access-logs) implementing access logs with Grafbase Gateway.

---

# Gateway - Security - Access Tokens

Access tokens grant access to Grafbase services and APIs. Use them to manage your account, organization, and graphs.

## Personal Access Tokens

Use personal access tokens to grant access for the [Grafbase CLI](/docs/grafbase-cli) and Management API.

### Scopes

Each access token has a specific scope that grants access to certain account and organization settings.

#### Full Access

Full access tokens manage all account and project settings for your personal account and any organizations you belong to.

#### Personal

These tokens manage only your own account and organization(s).

#### Organization

These tokens manage only the selected organization and its graphs. If you belong to multiple organizations, you will see them in the list of scopes.

### Create a Personal Access Token

Create access tokens from your [account settings > access tokens](https://app.grafbase.com/settings/access-tokens) page.

Give your access token a name and select a scope.

You cannot modify or read access tokens after creation. Copy the token to a secure location and never share it with anyone.

### Revoke Access Tokens

Tokens do not expire. Revoke them when you no longer need them.

Revoke tokens anytime from your [account settings > access tokens](https://app.grafbase.com/settings/access-tokens) page.

## Organization Access Tokens

Organization access tokens grant access for the Grafbase Gateway telemetry and Graph Delivery Network. These tokens belong to an organization, so they continue working even if you remove a user from the organization.

### Scopes

Each access token has a specific scope that grants access to certain organization and graph settings.

#### All Graphs

All Graphs tokens let you use the Grafbase Gateway to access all graphs within the organization.

#### Specific Graphs

Use these tokens to limit the Grafbase Gateway to access only certain graphs.

### Create an Organization Access Token

Create access tokens from your `organization settings > access tokens` page.

Give your access token a name and select the graph(s).

You cannot modify or read access tokens after creation. Copy the token to a secure location and never share it with anyone.

### Revoke Access Tokens

Tokens do not expire. Revoke them when you no longer need them.

Revoke tokens anytime from your `organization settings > access tokens` page.

---

# Gateway - Arguments

The Grafbase Gateway accepts command line arguments to configure its operations. Specify the gateway's behavior using these arguments. Run `grafbase-gateway --help` to see all available options.

## Listen Address

**Argument**: `-l, --listen-address `

The IPv4 or IPv6 address and port to listen on. Default is `127.0.0.1:5000`.

## Graph Ref

**Argument**: `-g, --graph-ref `

The graph reference to fetch from the Grafbase API, following the format `graph@branch`. Branch can be omitted to use the production branch. The gateway checks for graph changes every ten seconds. Cannot be used with the `--schema` option.

## Schema

**Argument**: `-s, --schema `

The path to the federated schema file. Use this option to run the gateway in air-gapped mode. Cannot be used with the `--graph-ref` option.

The gateway checks for schema changes every five seconds. If the file changes, it reloads the file, creates a new engine; and if configured, warms the operation cache.

## Config

**Argument**: `-c, --config `

The path to the TOML configuration file.

## Log Level

**Argument**: `--log `

Sets the logging level and controls the detail for all spans, logs and trace events.

In production, use only `off`, `error`, `warn`, and `info` levels. More verbose levels like `debug` include sensitive information such as request variables and responses.

Setting the level to `off` or `error` prevents the gateway from sending traces to the OpenTelemetry collector.

You can use these values: `off`, `error`, `warn`, `info`, `debug`, `trace`, or a custom string. Custom strings pass directly to [`tracing_subscriber::EnvFilter`] for debugging only. Grafbase makes no guarantees about the format stability.

The default level is `info`.

## Log Style

**Argument**: `--log-style `

Select a log style format for the gateway. Choose `pretty` for human-readable logs, `json` for machine-readable logs, or `text` for black and white logs when you pipe standard output to a file. Grafbase uses `pretty` as the default style.

## Hot Reload

**Argument**: `--hot-reload`

Enables hot reloading of the gateway configuration. This option applies to configuration sections that can change without a gateway restart.

## Help

**Argument**: `-h, --help`

## Version

**Argument**: `-V, --version`

---

# Gateway - Configuration - Authentication

## Default behavior

The default behavior of the gateway depends on whether any authentication is configured or not. When there isn't, the gateway will provide an anonymous token for each request.

On the other hand, if there is, whether it's an extension or the deprecated embedded jwt, the gateway will deny access if the user could not be authenticated.

This can be controlled with the following:

```toml

[authentication]

# If the client could not be authenticated

# Deny access

default = "deny"

# or grant an anonymous token

defualt = "anonymous"

```

## Extensions

Authentication extensions are available in the [Marketplace](/extensions):

- [JWT](/extensions/jwt): Validates a JWT token

You can learn how authentication extensions work and build your own with this follow along tutorial: [Customize your GraphQL Federation authentication and authorization with Grafbase Extensions](/blog/custom-authentication-and-authorization-in-graphql-federation).

A complete example can be found on [GitHub](https://github.com/grafbase/grafbase/tree/main/examples/authorization) and the [Grafbase SDK](https://docs.rs/grafbase-sdk/latest/grafbase_sdk/) is the extension reference.

## Deprecated embedded JWT

The Grafbase Gateway has an embedded JWT authentication implementation, with the same configuration as the [JWT](/extensions/jwt) extension.

```toml

[[authentication.providers]]

[authentication.providers.jwt]

name = "my-authenticator"

[authentication.providers.jwt.jwks]

url = "https://example.com/.well-known/jwks.json"

issuer = "example.com"

audience = "my-project"

poll_interval = 60

[authentication.providers.jwt.header]

name = "Authorization"

value_prefix = "Bearer "

```

- The `name` field specifies the name of the authenticator.